Publications

2026

- ASReview Dory: Bringing new and exciting models to ASReview LAB. Timo van der Kuil, Jelle Jasper Teijema, Jonathan de Bruin, and Rens van de Schoot. Software Impacts, 2026

Systematic reviewing is a time-consuming process that can be accelerated through screening prioritisation via active learning. ASReview Dory enables researchers to test, validate, and apply a wide range of embedders and classifiers in systematic literature screening. It extends ASReview LAB, an open-source, lightweight, and user-friendly environment with proven default models and extensibility through Python entry points. ASReview Dory adds ready-to-use transformer-based embedders, neural classifiers, and a framework for integrating custom models. Once installed, these models are directly available in ASReview LAB without additional configuration and can be systematically evaluated using the API or ASReview Makita.

@article{VANDERKUIL2026100809, title = {ASReview Dory: Bringing new and exciting models to ASReview LAB}, journal = {Software Impacts}, volume = {27}, pages = {100809}, year = {2026}, issn = {2665-9638}, doi = {10.1016/j.simpa.2025.100809}, url = {https://www.sciencedirect.com/science/article/pii/S2665963825000697}, author = {{van der Kuil}, Timo and {Teijema}, Jelle Jasper and {de Bruin}, Jonathan and {van de Schoot}, Rens}, keywords = {Systematic reviews, Machine learning, Natural language processing, Reproducibility, ASReview} }
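The screening prioritisation via active learning described above can be illustrated with a toy loop: train on the records labelled so far, rank the unlabelled ones, show the top-ranked record to the reviewer, and repeat. This is a minimal sketch under stated assumptions, not ASReview's API or its default models. A word-count multinomial Naive Bayes model stands in for the embedder/classifier pair, and the helper names (`train_nb`, `relevance_score`, `screen`) and the toy records are hypothetical.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Hypothetical stand-in for an embedder: lowercase whitespace tokens.
    return text.lower().split()


def train_nb(docs, labels):
    """Fit a multinomial Naive Bayes model (hypothetical stand-in classifier)."""
    counts = {0: Counter(), 1: Counter()}
    n_docs = Counter(labels)
    for doc, y in zip(docs, labels):
        counts[y].update(tokenize(doc))
    vocab = set(counts[0]) | set(counts[1])
    return counts, n_docs, vocab


def relevance_score(model, doc):
    """Laplace-smoothed log-odds that a record is relevant."""
    counts, n_docs, vocab = model
    total = {y: sum(counts[y].values()) for y in (0, 1)}
    score = math.log((n_docs[1] + 1) / (n_docs[0] + 1))
    for tok in tokenize(doc):
        if tok not in vocab:
            continue  # unseen words carry no evidence
        p1 = (counts[1][tok] + 1) / (total[1] + len(vocab))
        p0 = (counts[0][tok] + 1) / (total[0] + len(vocab))
        score += math.log(p1 / p0)
    return score


def screen(records, oracle, seed_ids, budget):
    """Certainty-based active learning: always show the top-ranked unlabelled record."""
    labelled = {i: oracle(i) for i in seed_ids}
    order = list(seed_ids)
    while len(order) < min(budget, len(records)):
        ids = list(labelled)
        model = train_nb([records[i] for i in ids], [labelled[i] for i in ids])
        pool = [i for i in range(len(records)) if i not in labelled]
        best = max(pool, key=lambda i: relevance_score(model, records[i]))
        labelled[best] = oracle(best)  # the reviewer (oracle) labels the record
        order.append(best)
    return order


# Toy example: 1 = relevant, 0 = irrelevant (fabricated illustrative records).
records = [
    "active learning for systematic review screening",
    "recipe for chocolate cake",
    "machine learning speeds up systematic review screening",
    "football match report",
    "screening prioritisation with active learning",
    "holiday travel tips",
]
truth = {0: 1, 1: 0, 2: 1, 3: 0, 4: 1, 5: 0}
order = screen(records, truth.__getitem__, seed_ids=[0, 1], budget=4)
print(order)  # [0, 1, 2, 4]
```

Starting from one relevant and one irrelevant seed, the loop surfaces both remaining relevant records before any irrelevant one. In a tool such as ASReview LAB, the stand-in classifier would be replaced by the selected embedder and classifier.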

2025

- ASReview LAB v.2: Open-source text screening with multiple agents and a crowd of experts. Jonathan de Bruin, Peter Lombaers, Casper Kaandorp, Jelle Teijema, Timo van der Kuil, Berke Yazan, Angie Dong, and Rens van de Schoot. Patterns, 2025

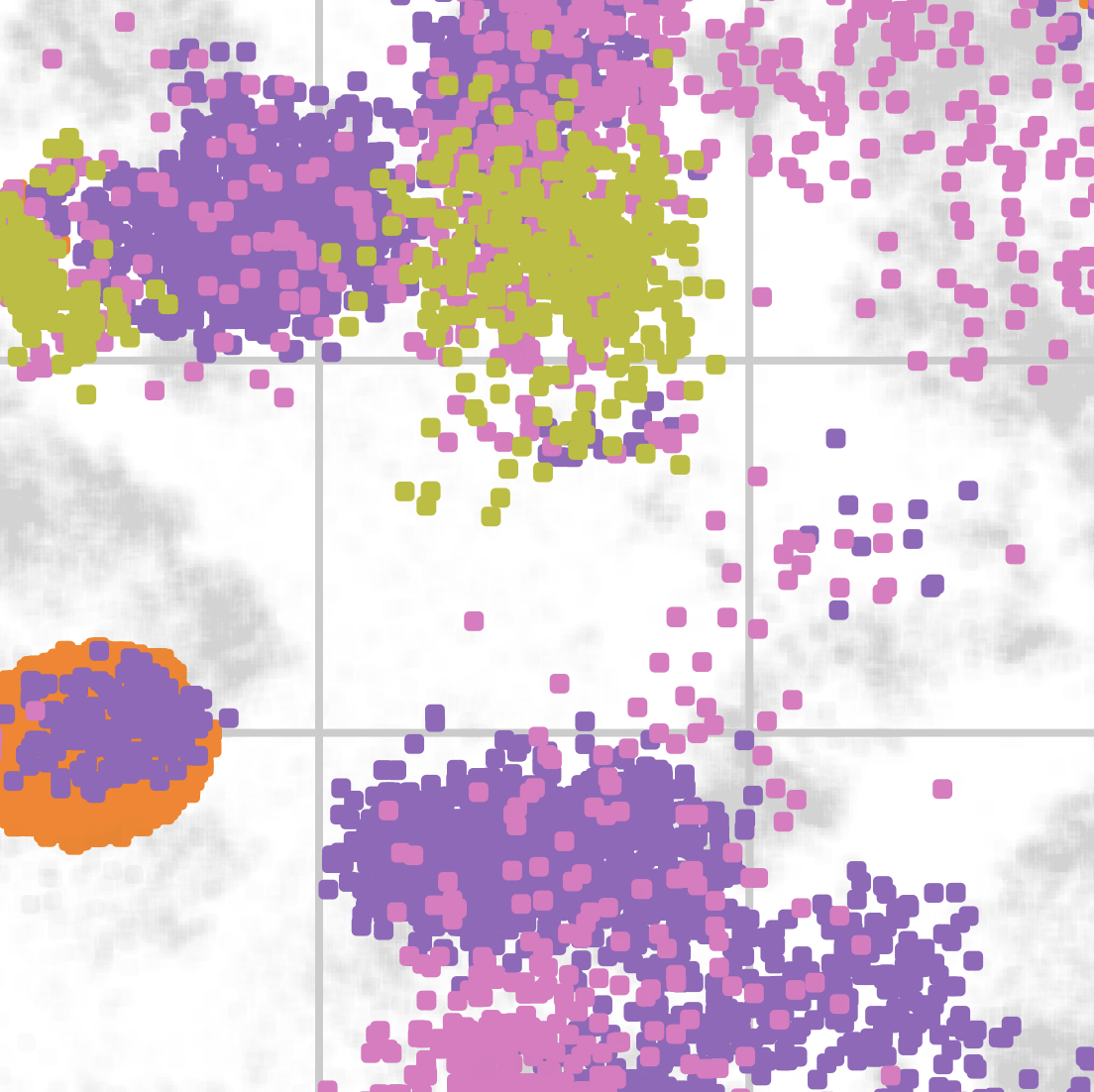

ASReview LAB v.2 advances AI-assisted systematic reviewing by enabling collaborative screening with multiple experts (“a crowd of oracles”) using a shared AI model. The platform supports multiple AI agents within the same project, allowing users to switch between fast general-purpose models and domain-specific, semantic, or multilingual transformer models. On the SYNERGY benchmark dataset, model improvements and hyperparameter tuning yield a 24.1% reduction in loss compared to version 1. ASReview LAB v.2 follows user-centric design principles and offers reproducible, transparent workflows. It logs key configuration and annotation data, balancing full model traceability against efficient storage. Future developments include automated model switching based on performance metrics, noise-robust learning, and ensemble-based decision-making.

@article{bruin2025asreview, title = {ASReview LAB v.2: Open-source text screening with multiple agents and a crowd of experts}, journal = {Patterns}, pages = {101318}, year = {2025}, issn = {2666-3899}, doi = {10.1016/j.patter.2025.101318}, url = {https://www.sciencedirect.com/science/article/pii/S2666389925001667}, author = {{de Bruin}, Jonathan and Lombaers, Peter and Kaandorp, Casper and Teijema, Jelle and {van der Kuil}, Timo and Yazan, Berke and Dong, Angie and {van de Schoot}, Rens} }